The unexpected harmony of data and music theory

How Brian Eno can teach us a thing or two about data insight generation

This is part 1 in a series of posts on the concepts of music theory and data [organizations | practices | structures | analyses], stemming from a number of conversations with my good friend Adhika Sigit Ramanto. It’s a personal indulgence of mine to extrapolate the theories of one domain to another. Don’t take it too seriously.

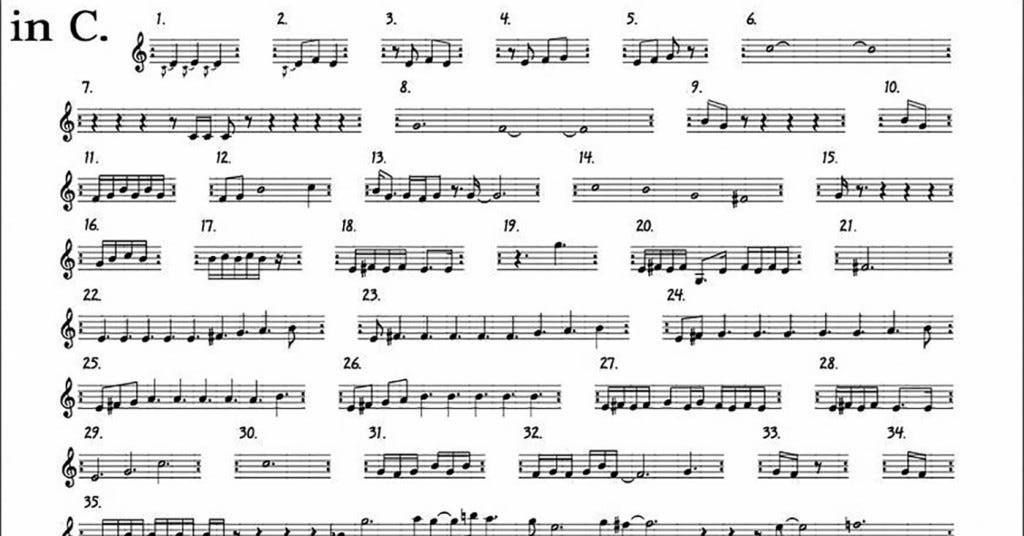

In C

In C is a set of musical fragments composed by Terry Riley in 1964. Each fragment can be thought of as a “phase”. The rules for performing this composition are:

Between 20 - 35 musicians may play the composition together

Each musician plays whatever instrument they choose

Each musician plays at whatever tempo they choose

Each musician plays at whatever volume they choose

Each musician may repeat each phase an arbitrary number of times at their own discretion and volition (e.g. you can play phase 2 for 10 loops while others play 15 loops or move on to phase 3)

The phases must be played starting at #1 and in order, but phases may be skipped by anyone at any time (e.g. one plays phases 1, 3, 7 while another plays phases 1,2,8)

There are a number of accompanying suggestions (e.g. listen very carefully to one another… to occasionally drop out and listen, stay within 2 or 3 patterns of each other) but the intended flexibility and autonomy is obvious. It actually reads a lot like an open source library. There's some base set of instructions, but you're free to apply, mix, and match the underlying components as you wish.

Riley’s In C was the catalyst to concepts in music theory like minimalism and generative music. Generative here meaning “ever-different and changing… created by a system”. To the untrained music theorist, it might seem like this sort of ad hoc, arbitrarily performed ‘composition’ would result in complete cacophony.

But it doesn’t.

Dozens of musicians from Portishead to Brian Eno (who popularized generative music) have played the piece within the confines of the rules and suggestions laid out above. What’s remarkable is that each performance sounds distinctively unique, and perfectly harmonic. (In fact, while writing this article, I accidentally opened two tabs of different performances playing simultaneously — and it sounded great.) Tyler Riley, the expert composer, architected In C with the scalability and extensibility that most platform engineers could only dream of. 🤷

Generative data analysis

The premise of generative music theory is that intentional, well-designed systems are capable of producing diverse, modular, and unique harmonic outputs from any given compatible input. As Brian Eno better describes,

“The point of them was to make music with materials and processes I specified, but in combinations and interactions that I did not.”

There may be less explicit emphasis on “harmony” here than I’m giving credit for, but the concept of raw materials processed by an algorithm to produce unmistakable music should be appealing to any moderately trained data scientist. Generative music theory acts as a set of guardrails, carefully designed by a master conductor, that allows other musicians with little context of the piece to safely play, engage, and create with a guaranteed “at least good" outcome. This is where 1 + 1 = 3. Generative music leverages and extends the expertise of the master composer to any participating musician who, in turn, infuses their own specialized skills, inclinations, and perspective to the interpreted performance.

What if we were to look at this theory through the lens of data organizations and data analytics?

Consider the generative capacity of a company where only the data org produces insights — the upper bound would be constrained by the individual data team’s resources, time, and creativity. And yet, most data teams keep their data tables and tools locked away from the rest of the organization, limiting their access to a set of preconfigured Tableau dashboards.

The musical metaphorical equivalent of this would be restricting a consumer’s music consumption to the pre-recorded songs published on Spotify. You might find initial novelty in a recording, build an understanding and interpretation of the lyrics or the melody, but you’re effectively stuck with consuming rather than inventing and creating. Static dashboards are just that — a handful of metrics and numbers in a set configuration and layout that do not facilitate novel discovery and insight generation.

I’d argue that the most scalable* data organizations have perfected the art of generative data analysis. Effective data teams at large scale companies are forced to act as composers: cleaning and arranging individual data points (notes) into tables and aggregated data sets (scales and chords). These are the raw materials that the company has to work with. As “composers”, the data team has the underlying responsibility of facilitating how and what gets to be “played”, by whom.

Imagine an organization in which dozens of individuals are free to generate their own data analysis and insights, and, to heed Riley’s suggestion, ‘listen very carefully to one another… to occasionally drop out and listen’. You’d have an unrestricted organization that compounds insight generation and information with minimal duplication of work (because you’ll drop out and listen to what everyone else is saying every once in a while). With the right guardrails in place, anyone could be a creator of safe, accurate insight generation.

Defining the Guardrails that Produce Harmony

The most successful organizations engage in a range of operating models. Just as the music from a single artist can be enjoyed live, on Spotify, and via generative formats (remixes, anyone?), data analysis can, and should be, conducted ad hoc (or in live partnership with PMs), consumed as polished dashboards, and in generative formats by non-specific data practitioners.

Proper implementation of generative data theory in an organization requires 3 things:

An expert composer —> An expert data practitioner

A flexible, yet structured composition —> A well-curated data sandbox

Musicians at varying skill levels —> Data-literate business and product experts

A strategic data team is the first non-negotiable. The idea is to turn the functional expertise of the data practitioner into operational and strategic leverage: a data sandbox. The conscientious design, structure, and readability of this sandbox must be flexible enough to accomodate the inputs of our 3rd non-negotiable: the expertise and intuitions of every product manager, designer, engineer, and business partner in the organization. They may not be classically trained data practitioners, but they are experts in their respective fields, similar to our musicians, each of which imparts a certain style and ingenuity to the generative data insight process to produce something meaningful, unique, and personally fulfilling.

Designing a truly generative data organization is more art than science at this level, but here are a handful of principles I might use to guide my strategy:

the data organization must have a strong understanding of the data in the context of the business’s needs (you can’t structure and arrange what you can’t relate)

the data is easy to understand (anybody could take a look at the dataset and accurately describe what the business does)

the data is easy to manipulate (consistent, clear naming conventions and data features makes it hard to mix and match the wrong data)

the data visualization and summaries make it hard to be not-obviously wrong

the organization’s non-data practitioners are still relatively data literate

In previous talks, I’ve likened effective data arrangements as similar to those of effective closet arrangements: choosing to organize a wardrobe by color versus type of clothing typically results in different outfit inspirations. The proximity of customer attribution data columns to their churn propensity score columns to the data of the last merchant they transacted with has a natural influence on the “musician’s” consideration and generation of insights. In practice, the data composers in an organization should be actively testing, rearranging, and reorganizing the data in ways that feel intuitive and evocative for their business counterparts.

The parallels of scalable, creative generative music to data insight generation make it an interesting framework to borrow from. A theory of generative data analysis would articulate the sandbox from which product managers, designers, and business teams could be independently generative with the organization’s data. Instead of data teams being solely responsible for pulling data, insight generation, dashboard building, and discovery, any non-professional data practitioner could enter the well-defined parameters of the sandbox, read the universally defined “sheet music” data, and come up with their own arrangement to produce something truly insightful and meaningful in the context of their unique business goals. That is true data democratization.

*I made the distinction early on that the only data organizations that require generative data analytics are those operating at scale. That's because most companies have yet to even master the art of ad hoc data analysis and reporting. I think generative data analysis is more of an additive, rather than replacement, to basic data services and reporting.